Professor Wang Xigen Holds an Academic Research Session for Visiting Students from Oxford

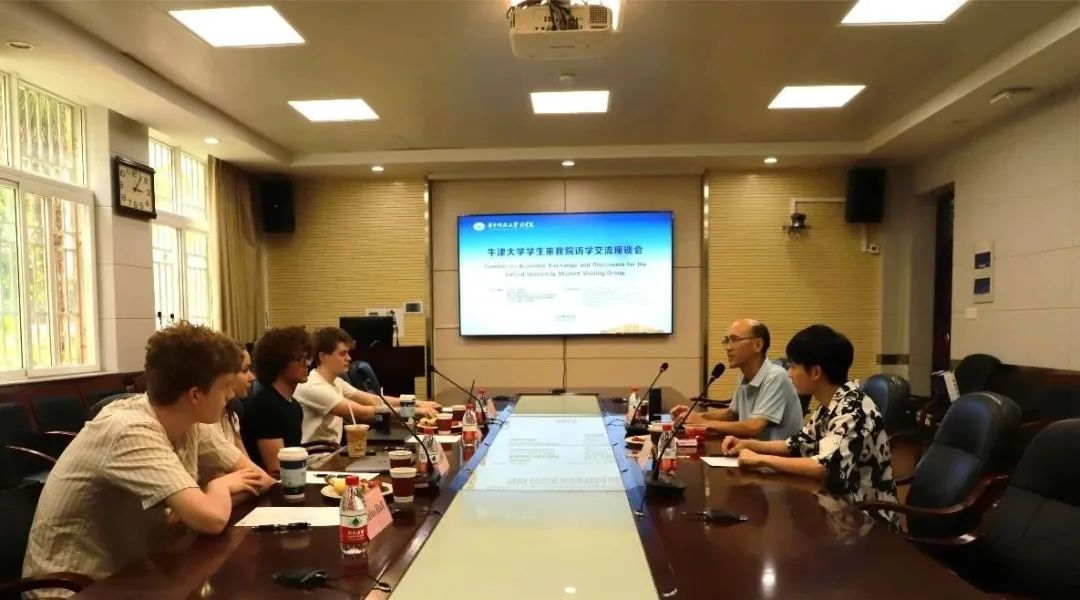

7月17日下午,华中科技大学法学院院长、国际数字法律协会创始会员、《数字法律评论》主编、湖北司法大数据研究中心负责人汪习根教授在华中科技大学法学院105教室为牛津大学来访学子举办专场课题研究指导会。四位来访学生对他们在学习和研究中所遇的问题进行了提问,汪习根教授详细、细致地回答了有关问题。国家人权与教育培训基地·华中科技大学人权法律研究院秘书王娜老师、博士生喻翰林列席参加。

On the afternoon of July 17, Prof. Wang Xigen, Dean of the Law School of Huazhong University of Science and Technology (HUST), founding member of the International Digital Law Association, Editor-in-Chief of Digital Law Review, and Director of the Hubei Judicial Big Data Research Center, held an academic research session for visiting students from the University of Oxford in Room 105 of the Law School. Four visiting students raised questions they had encountered in their study and research, and Prof. Wang Xigen answered them carefully and in detail. Ms. Wang Na, secretary of the Institute of Human Rights Law of Huazhong University of Science and Technology (a National Human Rights Education and Training Base), and Ph.D. candidate Yu Hanlin attended the session.

研讨期间,所有问答都紧紧围绕汪习根教授主持的科技部重大专项“新一代人工智能立法”这一主题展开。

All the questions and answers revolved around the theme of “New Generation Artificial Intelligence Legislation”, a major project of the Ministry of Science and Technology led by Prof. Wang Xigen.

Rufus Hall提出的问题

人类是否能信任人工智能?我在思考一些有关人工智能立法的实践问题,比如欧盟人工智能法案的实施,部分学者对相关法案进行了评论,人们也逐步意识到人工智能与隐私权保护等实践问题。您是否可以对我的研究进行指导和建议?

Questions from Rufus Hall:

How can humans trust AI? I am thinking about some practical issues in AI legislation, such as the implementation of the EU AI Act. There are some commentaries on that Act, and people are becoming aware of practical issues such as privacy. Do you have any advice for my further research?

汪习根教授解答

首先,关于人类如何信任人工智能,这取决于立法是否足以处理争议,你可以对此进行进一步思考。同时,如何在法治框架下平衡人工智能的利与弊也是一个很好的话题。你可以用你专业的哲学知识对它进行价值分析。第二个关于法律实践的问题,我认为你可以在人权法领域做一些研究。就像你提到的,自由权和隐私权都是基本人权,如何在人权和人工智能的权利(如果人工智能拥有权利)之间划清界限来指导法律实践也是必要的。

Answer from Prof. Wang Xigen:

First, whether humans can trust AI depends on whether legislation is sufficient to handle disputes; you can think further about that. Meanwhile, how to balance the advantages and disadvantages of AI within the framework of the rule of law is also a very good topic. You can use your knowledge of philosophy to carry out a value analysis of it. Your second question concerns legal practice, and I think you could do some research in the field of human rights law. As you mentioned, the right to liberty and the right to privacy are both basic human rights. It is also necessary to draw the boundary between human rights and the rights of AI (if AI has rights) in order to guide legal practice.

Luisa Mayr提出的问题

我注意到有一些关于欧盟人工智能法案的评论,有趣的是,不同的法域对此持不同的态度。如欧盟倾向于未雨绸缪,但英国更倾向于观望态度。受该法案的启发,我的研究主题是关于算法沙盒的。算法沙盒就像一个平台,每个人工智能产品在发布之前都应该通过沙盒测试。然而,立法如何信任沙盒仍在讨论之中。您对此有何评论?

Questions from Luisa Mayr:

I have noticed that there are some commentaries on the EU AI Act, and it is interesting that different jurisdictions hold different attitudes towards it. The EU tends towards pre-emptive regulation, whereas the UK prefers a wait-and-see approach. Inspired by that Act, my research topic is the sandbox. A sandbox is like a platform on which every AI product should be tested before its release. However, how the sandbox can be made legally trustworthy is still under discussion. Do you have any comments on that?

汪习根教授解答

关于你的问题,如果沙盒与黑盒相同,其中的算法是不确定的,你应该考虑是谁设计了沙盒。以我个人观点来说,也许一些科学家或人工智能专家设计了沙盒,但我们法律专家对此有何贡献?这是你应该首要思考的核心问题。围绕这个核心问题,我建议你进一步思考以下三个问题:首先,人工智能是否拥有权利?这项权利的主体是什么?其次,如果人工智能拥有权利,那么人工智能的权利和责任的边界是什么?第三,如何划分人工智能权利与人的权利的界限及其责任?我认为这是一个非常有趣的话题,题目可以拟为:从技术到监管——制定一个公正、公平和可信的算法平台法律。此外,你可以想想政府和公司、政府和政府、公司和公司之间的关系如何处理,如何平衡管理和创新激励也很重要。

Answer from Prof. Wang Xigen:

Regarding your question, if the sandbox is like a black box in which the algorithm is uncertain, you should think about who designs the sandbox. From my perspective, perhaps some scientists or AI experts designed it, but what do we legal experts contribute to it? That is the primary concern. Around that concern, I suggest you think further about three questions. First, does AI have rights? Who is the subject of those rights? Second, if AI has rights, what are the limits of AI’s rights and responsibilities? Third, how should the boundary between the rights of AI and human rights, and the corresponding responsibilities, be drawn? I think that is a really interesting topic, and it could be framed as: From Technology to Regulation: Legislating for a Just, Fair and Trustworthy Algorithm Platform. Moreover, you can think about the relationships between government and company, government and government, and company and company. How to balance management and incentives for innovation is also important.

Ned Chapman提出的问题

我一直在思考人工智能产品责任的相关问题。我现在正在思考的是:是否可以将人工智能产品规制融入到现有的民事责任体系中?或我们应该建立另一个新的体系?我在搜集的资料中注意到,一些学者认为我们可以把AI当作危险的饲养宠物,一些学者认为我们可以把AI当作一个法人。此中,我们人类如何从人工智能立法中受益?(如通过设立AI保险)同时,我做了一些案例分析研究,我在想我是否需要缩小我的研究范围至侵权法?

Questions from Ned Chapman:

I have been thinking about AI product liability. What I am working on now is whether AI products could be brought within the existing civil liability regime, or whether we should build a new regime. Some scholars say we could treat AI like a dangerous pet, while others say we could treat AI like a legal person. I am wondering how we humans could benefit from AI legislation, perhaps through an insurance system. Meanwhile, I have done some case studies. Do I need to narrow my research scope to tort law?

汪习根教授解答

你选择的主题非常好,你学会了如何像学者一样思考问题。首先,关于人工智能产品责任,如果我们将其适用于现有的民事产品法律责任,还是会存在谁负责的问题。是生产者吗?设计者吗?销售者吗?人工智能本身?还是使用者?如果人工智能自己承担责任,它如何承担?我们又如何惩罚人工智能?你可以关注2018年发生在美国的第一个人工智能自动驾驶汽车案例。如果乘客(使用者)承担责任,是否过于苛刻?如果行人承担责任,是否不太公平?这都需要进一步的研究。对于你的研究范围,我认为更具体是必要的,这可以帮助你集中精力,明确研究方向。

Answer from Prof. Wang Xigen:

You have a really good topic, and I think you know how to think like an academic. First, regarding AI product liability, if we bring it under the existing product liability regime, who is responsible? Is it the producer? The designer? The seller? The AI itself? Or the user? If the AI itself bears responsibility, how could it do so? How could we punish AI? You can look at the first AI autonomous vehicle case, which happened in the USA in 2018. If the passenger (user) bears responsibility, is that too harsh? If the pedestrian bears responsibility, is that unfair? These questions all need further study. As for your research scope, I think it is necessary to make it more specific, which would help you focus.

Jack Bridgford提出的问题

由于我的专业是中文,所以我想对中国人工智能立法和英国人工智能立法进行比较研究。我现在关注的主题是关于人工智能法官的种族偏见问题,不知是否可以进行研究?

Questions from Jack Bridgford:

As my major is Chinese, I am thinking about doing a comparative study of Chinese AI legislation and UK AI legislation. The question I am focusing on now is the racial bias of AI judges, and I wonder whether it is possible to research that.

汪习根教授解答

比较研究是一个很好的研究方法。我很高兴你能在这里练习你的中文。我们在中国经常使用的学术数据库叫CNKI,你应该有访问途径。如果你只考虑英国和中国的比较研究,我认为你应该缩小你的研究主题。对于人工智能法官的种族偏见不是一个单一的问题,我认为你可以考虑地更宏观一点。种族偏见更多地与身份认同和算法歧视有关。此外,如何处理人工智能监管中的道德问题也应详细讨论。因此,你可以对中国有关的立法和英国有关的立法进行更多的梳理,从而更扎实地进行比较研究。

Answer from Prof. Wang Xigen:

Comparative study is a really good methodology. I am really glad that you can practice your Chinese here. The academic database we often use in China is called CNKI, and you may have access to it. If you only consider a comparative study between the UK and China, I think you should narrow your topic. The racial bias of AI judges is not an isolated problem, so I think you could take a broader view. Racial bias is more closely related to identity recognition and algorithmic discrimination. Furthermore, how to deal with ethical issues in AI regulation is also under discussion. Therefore, you could review the existing Chinese and UK legislation more thoroughly so that your comparative study rests on a more solid footing.

研讨期间,轻松的气氛持续地弥漫着。工作人员悉心为指导会准备了咖啡和点心,研讨在欢乐和愉悦中结束。汪习根教授的回答为学生们指明了他们的研究思路,阐明了他们研究的创新之处,启发了他们的研究方向,所有的学生都收获颇丰。汪习根教授指出,优秀的学术文章将优先推荐至即将举行的“2024年数字法治与智慧司法国际研讨会”发言或《数字法律评论》集刊发表,欢迎同学们踊跃提交投稿。指导会接近尾声时,汪习根教授对牛津访学团学生在华中科技大学的生活表达了关切。同时,他建议同学们要紧跟人工智能立法领域的热点问题,密切关注不同国家(如欧盟、美国、英国、中国等)的最新动态,保持研究领域的专注。最后,汪习根教授向全体同学致以诚挚的祝福,并祝愿他们在中国有一个美好的经历。

During the session, the atmosphere remained relaxed. Coffee and snacks were served, and the session ended on a cheerful note. Prof. Wang Xigen's answers pointed out research paths for the students, clarified the innovative aspects of their research, and inspired their research directions. All the students gained a great deal. Prof. Wang Xigen noted that excellent academic articles will be given priority recommendation for presentation at the upcoming "2024 Digital Rule of Law and Smart Justice International Conference" or for publication in the Digital Law Review, and students are welcome to submit their work. Towards the end of the session, Prof. Wang Xigen expressed his concern for the visiting students' life at HUST. He also suggested that the students keep abreast of hot issues in the field of AI law, pay close attention to the latest developments in AI legislation in different jurisdictions (the EU, the USA, the UK, China, etc.), and stay focused. Finally, Prof. Wang Xigen extended his sincere wishes to all the students and wished them a wonderful experience in China.